ChatGPT Prompt Engineering: A Complete Guide For Beginners

Hello there, fellow AI enthusiasts and tech-savvy folks!

Today, I’m excited to guide you through the fascinating world of ChatGPT prompt engineering and how it can help you unleash the full potential of these chatbots and other Large Language Models (LLMs).

You might be thinking, “Do I need to be a coding genius for this?” Well, fret not because the answer is a resounding no. We’re going to keep things simple and jargon-free, so no coding background is necessary.

In this article, we will explore the ins and outs of prompt engineering. Why, you ask? It’s a skill that’s in high demand, with some companies reportedly dishing out hefty salaries, reaching up to $335,000 a year (according to Bloomberg), for professionals who master this craft.

We’ll start with a quick peek into the fascinating realm of AI, followed by a closer examination of the famous and powerful large language model, ChatGPT.

Along the way, we’ll cover a brief introduction to using ChatGPT, the prompt engineering mindset, best practices, and IO and CoT prompting, and, to wrap it up, we’ll look at AI hallucinations.


What is prompt engineering?

Let’s kick things off by delving into what prompt engineering is all about.

Prompt engineering is the practice of refining prompts so that interactions between humans and AI produce the most useful results.

It’s a gig that emerged alongside the rise of artificial intelligence. It revolves around crafting, fine-tuning, and systematically optimizing prompts.

The goal? To perfect the interaction between us humans and AI to the max. But it doesn’t stop there – prompt engineers also have the task of keeping tabs on these prompts over time, ensuring they stay effective as AI continues to evolve.

Keeping a fresh and updated prompt library is a must for these engineers, along with sharing their insights and being the go-to folks in this field.

Now, you might be wondering why we even need this prompt engineering thing, and how it sprouted from the realm of AI.

But before we dive into that, let’s make sure we’re all on the same page about AI itself. Artificial intelligence is the mimicry of human thought processes by machines.

I say mimicry because AI isn’t sentient – at least, not just yet. It can’t think for itself, despite how clever it might appear. Often, when we toss around the term “AI,” we’re basically talking about something called machine learning.

Machine learning works by munching on huge piles of training data, dissecting it for patterns and connections, and then using that insight to predict stuff based on the data it’s seen.

Imagine we’re spoon-feeding our AI model some data, telling it that if a paragraph looks a certain way with a specific title, it should belong to a specific category.

With a dash of code magic, we can train our AI to make pretty accurate guesses about what future paragraphs are all about.
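If you’re curious what that “code magic” might look like, here is an optional, minimal sketch. It is a toy word-counting classifier, not how real AI systems are built, and the training paragraphs, the category names, and the `train` and `predict` functions are all made up for illustration.

```python
from collections import Counter

# Tiny, made-up training set: (paragraph, category) pairs.
TRAINING_DATA = [
    ("the team scored a late goal to win the match", "sports"),
    ("the striker missed a penalty in the final match", "sports"),
    ("the new phone has a faster chip and a better camera", "tech"),
    ("the laptop update improves battery life and the camera", "tech"),
]

def train(examples):
    """Count how often each word appears in each category."""
    counts = {}
    for text, label in examples:
        counts.setdefault(label, Counter()).update(text.lower().split())
    return counts

def predict(counts, text):
    """Pick the category whose training words overlap most with the text."""
    words = text.lower().split()
    scores = {label: sum(c[w] for w in words) for label, c in counts.items()}
    return max(scores, key=scores.get)

model = train(TRAINING_DATA)
print(predict(model, "a goal in the last minute of the match"))  # prints: sports
```

The program has never seen the final sentence before, yet it guesses the category from patterns in the data it was fed, which is the basic idea behind machine learning.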

As of now, the world of AI is sprinting ahead with impressive strides. Thanks to vast pools of training data and some seriously talented developers, today’s AI tech can whip up lifelike text responses, craft images, compose music, and dabble in various other creative endeavors. It’s a thrilling time to be part of this ever-evolving AI world.

Importance of prompt engineering

Why is machine learning important? And why does prompt engineering matter? With the rapid growth of AI, even its creators find it hard to control and predict its outcomes.

To grasp this, consider asking an AI chatbot a simple math question like “What is 1 + 1?” You’d expect a clear answer: 2.

However, imagine you’re a student learning English.

I’m about to demonstrate how different responses can shape your learning experience based on the prompts given.

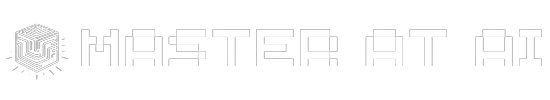

For this example, I’m using ChatGPT’s GPT-3.5 model. Let’s begin with the fundamentals. If you were to type, “correct the given text” and input a poorly written text like this:

“Today was great in the world for me. I went to Disneyland with my mom. It could have been better though if it wasn’t raining.”

The English learner gets a better sentence, but there’s room for improvement, and the learner might feel a bit lost.

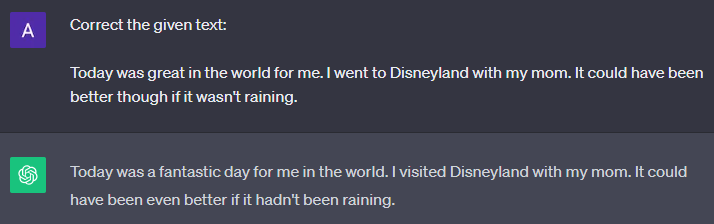

What if the learners could receive tailored responses from a teacher who understands their interests, keeping them engaged?

Well, with the right prompts, AI can make this happen. Let’s go ahead and craft a prompt to achieve this.

I’ll use this: “I want you to act as my spoken English teacher. I’ll speak to you in English, and you’ll reply to me in English to help me practice. Keep my reply neat within 100 words. Correct my grammar and typos, and ask a question in your reply. I want you to ask me a question first. Let’s begin!”

Now, this interaction becomes dynamic. The AI asks you questions, corrects your mistakes, and guides your learning journey.

It’s an interactive experience that transforms your learning process, all thanks to the carefully crafted prompt we provided.

You could even ask the AI to correct factual errors for a more enriching learning experience.

Pretty amazing, isn’t it? We’ll explore more of these concepts soon, but let’s start with the basics for now.

Linguistics

Linguistics, in a nutshell, explores the intricate world of language.

It delves into a wide range of aspects, from the way we produce and perceive speech sounds (phonetics) to the study of sound patterns and changes (phonology).

Morphology is all about understanding how words are structured, while syntax focuses on the arrangement of words in sentences.

Semantics examines the meaning of words and phrases, and pragmatics looks at how language functions within different contexts.

Historical linguistics explores how languages change over time, while sociolinguistics investigates the connection between language and society.

Computational linguistics is the study of how computers can handle human language, and psycholinguistics delves into how humans learn and use language.

And much more…

It’s quite a diverse field, isn’t it?

Now, you might wonder why linguistics is so important for engineering, specifically when it comes to crafting prompts.

Well, the key lies in understanding the nuances of language and how it’s employed in various situations.

By grasping these intricacies, we can create more effective prompts. Moreover, using a standardized grammar and language structure that’s commonly accepted ensures that AI systems generate the most accurate results.

Since these systems are typically trained on vast amounts of data that adhere to universal language standards, maintaining this standardization is absolutely crucial.

Language models

Think of language models as the key to a world where computers can understand and produce human language effortlessly.

In this fantastic realm, machines chat, craft stories, and even compose poetry with ease, thanks to the magic of language models.

These models are like digital wizards, capable of comprehending and generating text that resembles human language.

So, what’s the secret behind this wizardry? It all starts with a language model ingesting a vast amount of written content, from books and articles to websites.

It learns how humans use language, becoming a master linguist in the process, adept at conversing, maintaining grammar, and adhering to various writing styles.

To break it down further, when you input a sentence into a language model, it dissects the sentence, analyzing word order, meanings, and the way everything connects.

After this analysis, it generates a coherent prediction or continuation of the sentence, making it seem like it was crafted by a human.
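For the curious, the idea of “predicting the continuation” can be sketched with a toy example. This is an assumption-heavy simplification: real models like ChatGPT use large neural networks, while this sketch only counts which word tends to follow which in a tiny made-up corpus. The names `CORPUS`, `build_bigram_counts`, and `predict_next` are invented for illustration.

```python
from collections import Counter, defaultdict

# A deliberately tiny "training corpus".
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def build_bigram_counts(text):
    """For every word, count which words follow it."""
    words = text.split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in following:
        return None
    return following[word].most_common(1)[0][0]

counts = build_bigram_counts(CORPUS)
print(predict_next(counts, "sat"))  # prints: on
print(predict_next(counts, "the"))  # prints: cat
```

The more text such a model sees, the better its guesses become, which hints at why modern language models are trained on such enormous amounts of writing.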

Picture it as a conversation with a digital buddy – you ask a question, and it provides a well-thought-out response. You share a joke, and it responds with a clever remark. It’s like having a language expert at your disposal, always ready for a chat.

Now, you might be curious about where you can find these language models in action. They’re all around, from your smartphone’s virtual assistants to customer service chatbots and even in the creative world of writing.

They help us find information, offer suggestions, and create content. But here’s the thing to remember: these language models are a blend of human creativity and the power of algorithms, harnessing the strengths of both worlds.

History of language models

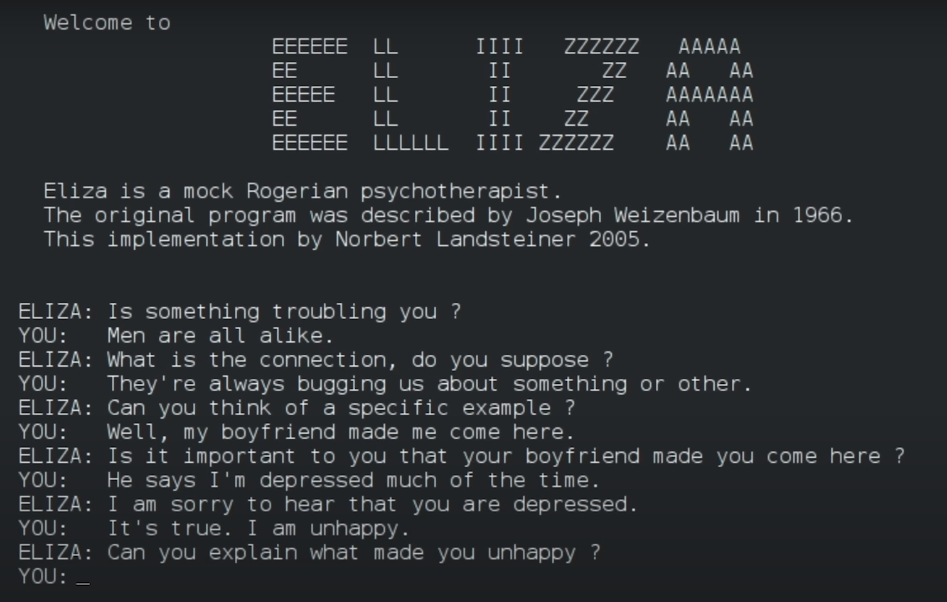

Let’s dive into the intriguing history of language models, starting with 1964 and the birth of Eliza, one of the first chatbots.

It was a pioneering natural language processing computer program developed at MIT by Joseph Weizenbaum between 1964 and 1966.

It was designed to simulate conversations with humans, particularly resembling the role of a Rogerian psychotherapist who listens attentively and asks insightful questions to help individuals explore their thoughts and emotions.

Eliza’s magic lay in its pattern-matching capability. It had an extensive collection of predefined patterns, each linked to specific responses, like enchanted spells enabling Eliza to comprehend and reply to human language.

In an interaction with Eliza, it would meticulously analyze your input, searching for patterns and keywords.

Your words were transformed into a sequence of symbols, which Eliza would then match against its stored patterns.

When a pattern was identified, Eliza would work its charm by converting your words into a question or statement designed to encourage deeper introspection.

For instance, if you write “I am sad”, Eliza would detect the pattern and respond with a question like, “Why do you think you feel sad?” It encouraged reflection and introspection, akin to the support of a caring therapist.

However, there’s an intriguing twist here: Eliza didn’t genuinely comprehend your words. It was a clever facade.

Eliza relied on pattern matching and ingenious programming to create the illusion of understanding while essentially following a set of predefined rules.
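To make the pattern-matching idea concrete, here is an optional sketch in the spirit of Eliza. The rules below are hypothetical and written just for this article; they are not Eliza’s original script, and the `respond` function is an invented name.

```python
import re

# A few hand-written rules: (pattern, response template).
# Hypothetical rules for illustration, not Eliza's original script.
RULES = [
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "Why do you think you feel {0}?"),
    (re.compile(r"\bi want (.+)", re.IGNORECASE), "What would it mean to you to get {0}?"),
    (re.compile(r"\bmy (mother|father|sister|brother)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please, go on."

def respond(user_input):
    """Find the first matching rule and turn the user's words into a reply."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(match.group(1))
    return FALLBACK

print(respond("I am sad"))  # prints: Why do you think you feel sad?
```

Notice that nothing here understands sadness. The program only spots the words “I am” and reuses whatever comes after them, which is exactly the kind of illusion described above.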

Nevertheless, people found themselves captivated by its conversational abilities. They felt heard and understood, even though they were aware they were conversing with a machine.

It was like having a digital confidant always ready to listen and provide gentle guidance. Interestingly, Weizenbaum, Eliza’s creator, initially designed the program to explore human-machine communication, but he was taken aback when individuals, including his secretary, ascribed human-like emotions to the computer program.

But that’s a discussion in itself.

Eliza had a profound impact, sparking interest and research in the realm of natural language processing, paving the way for more advanced systems capable of genuine human language understanding and generation.

This marked the humble beginning of the remarkable journey into the world of conversational AI.

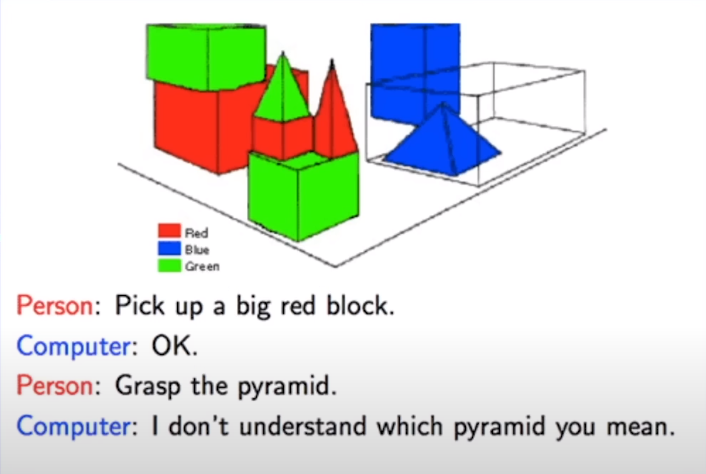

Fast forward to the 1970s, when we encountered ‘SHRDLU’, a program that could comprehend simple commands and interact with a virtual world of blocks.

While not precisely a language model, it laid the groundwork for machines understanding human language.

The real era of language models took off around 2010 when the potential of deep learning and neural networks came to the forefront. Enter GPT, or generative pre-trained transformer, a powerful entity poised to revolutionize the world of language.

In 2018, OpenAI introduced the first version of GPT, trained on a huge amount of written text.

GPT-1 was a glimpse of what was to come: an impressive language model for its time, but dwarfed by the successors we use today.

Over time, the saga continued with the introduction of GPT-2 in 2019, followed by GPT-3 in 2020.

GPT-3 emerged as a titan among language models, equipped with an enormous number of parameters: 175 billion, to be precise.

It captivated the world with its unparalleled capacity to understand, respond, and even generate creative pieces of writing.

The advent of GPT-3 marked a significant turning point in the landscape of language models and AI. As of this writing, we have GPT-3.5 and GPT-4, which were trained on vast amounts of text, much of it from the internet.

Additionally, there’s Google’s PaLM and many other innovations. It seems like we’re only scratching the surface in the realm of language models and AI.

Hence, learning to harness these models through prompt engineering is a wise move for anyone today.

Prompt Engineering Mindset

When you’re thinking about coming up with effective prompts, it’s crucial to approach it with the right mindset.

Essentially, your goal is to craft a single prompt that yields the desired result without wasting time and tokens on numerous attempts.

This approach is somewhat akin to how we’ve improved our Googling skills over the past five years.

Back then, we might have taken multiple tries to find the information we wanted, but now, we’ve become more adept at entering the right search terms from the get-go, saving time.

A similar mindset can be applied to prompt engineering.

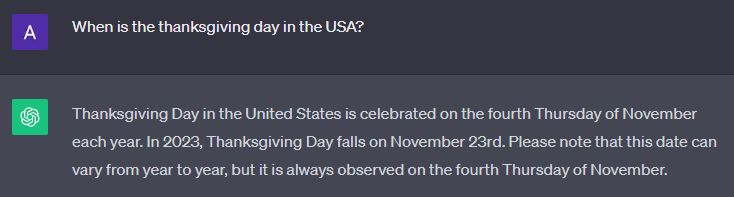

Let’s take a quick look at how to use ChatGPT powered by OpenAI, specifically the GPT-3.5 model.

I’ll be demonstrating examples using ChatGPT.

To follow along or understand how to use the platform, you can head over to openai.com and sign up for an account.

If you’ve already signed up, just log in, and that will take you to the platform where you can choose your preferred model.

By default, it is set to the GPT-3.5 model.

Excellent! You can see your previous chat interactions. If you want to start a new conversation, simply click the “New Chat” button.

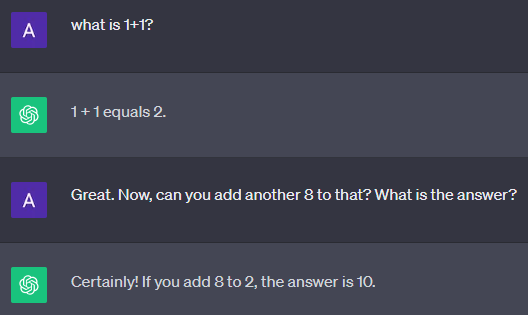

For instance, you can ask a question like “What is 1+1?” and hit “Send” to get a response. You’re now interacting with ChatGPT.

You can also continue the conversation by building on what was previously discussed. For example, you can ask, “Great. Now, can you add another 8 to that? What is the answer?” And it will consider the context.

This is just a brief introduction, and we’ll delve deeper in a minute.

To create a new chat, click the “New Chat” button, and if you want to delete the old one, use the “Delete” button. It will automatically bring up a new chat.

Wonderful! You can also use OpenAI’s API, which allows you to build your own applications. To use the API, go to the API reference and create an API key. This key enables you to interact with the OpenAI API and build custom applications similar to what we’ve just seen.
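If you do end up experimenting with code, it helps to know how a conversation keeps its context. Here is an optional sketch, assuming the chat is stored as a list of messages that each have a `role` and a `content` (the shape used by chat-style APIs). It makes no network call, the assistant reply is typed in by hand, and `add_turn` is an invented helper name.

```python
def add_turn(history, role, content):
    """Return a new history with one more message appended."""
    return history + [{"role": role, "content": content}]

history = []
history = add_turn(history, "user", "What is 1+1?")
# Hand-written stand-in for the model's reply; no API is called here.
history = add_turn(history, "assistant", "1 + 1 equals 2.")
history = add_turn(history, "user", "Great. Now, can you add another 8 to that? What is the answer?")

for message in history:
    print(f"{message['role']}: {message['content']}")
```

Because the whole list is sent along with each new question, the model can work out that “that” in the follow-up refers to the earlier answer.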

Anyway, let’s continue using ChatGPT.

Tokens

Tokens, in this context, refer to chunks of text, which can be as short as a single character or as long as a word.

GPT-3.5 has a context window, meaning the amount of text it can consider at once, of roughly 4,000 tokens (around 3,000 words). That makes it proficient at handling lengthy passages, long conversations, and complex documents.

The generous context window is an essential feature for tasks like text generation, translation, summarization, and more.

It allows GPT-3.5 to maintain context and coherence in longer documents, improving its overall performance and making it more versatile for various applications.
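As an optional aside, the rule of thumb quoted above (about 4,000 tokens for about 3,000 words) can be turned into a rough estimator. This is only an approximation built on that ratio; real tokenizers split text differently, and the names `estimate_tokens` and `fits_in_context` are invented for this sketch.

```python
import math

# Rule of thumb from the text: about 4,000 tokens for about 3,000 words.
WORDS_PER_TOKEN = 3000 / 4000   # 0.75
CONTEXT_WINDOW_TOKENS = 4000    # approximate figure quoted above

def estimate_tokens(text):
    """Rough token estimate from a whitespace word count."""
    word_count = len(text.split())
    return math.ceil(word_count / WORDS_PER_TOKEN)

def fits_in_context(text, limit=CONTEXT_WINDOW_TOKENS):
    """Check whether the estimated token count stays within the limit."""
    return estimate_tokens(text) <= limit

sample = "Today was great in the world for me."
print(estimate_tokens(sample))  # prints: 11
```

An estimate like this is handy for a quick sanity check before pasting a long document into a prompt.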

In short, tokens are the unit GPT-3.5 reads in, and the context window sets how many of them it can keep in view at once.

Together, they determine how much of a long conversation or document the model can work with, which is worth keeping in mind whenever you write a prompt.

Basic prompt engineering practices

The concept of prompt engineering may seem straightforward, but there’s more to it than meets the eye.

It’s not just about crafting a single sentence, like the “correct the given text” example we saw earlier.

Creating powerful prompts involves considering several key factors. Here are some tips for composing effective prompts:

1. Clarity is Key

Ensure your instructions are clear and detailed in your query. Avoid assuming that the AI understands the specifics of what you’re asking.

For instance, instead of a vague question like “When is the election?”, which leaves room for misinterpretation, specify the context, such as “When is the next presidential election for France?”

Here, I’m being more specific about the context.

This precision saves time and avoids potential frustration.

Let’s take another example.

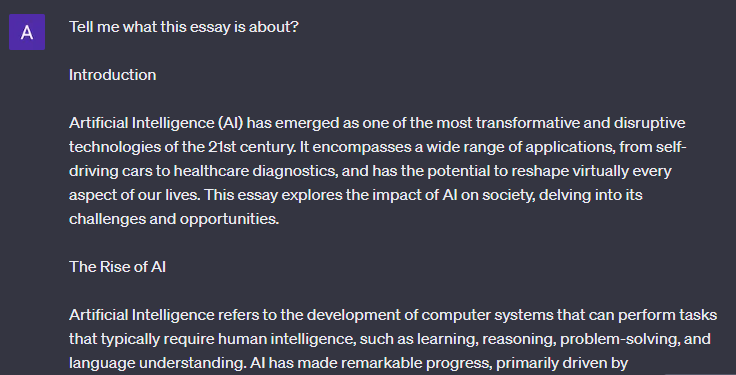

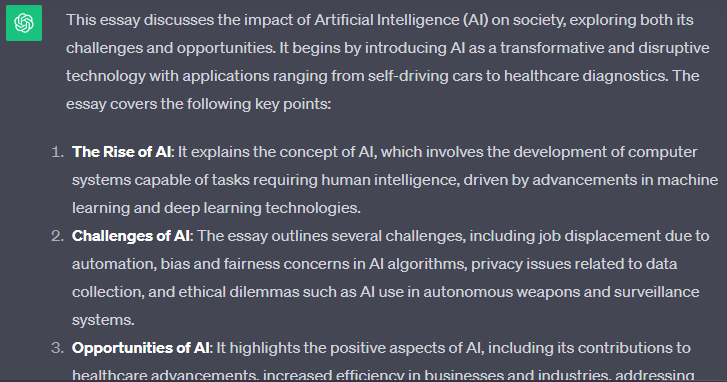

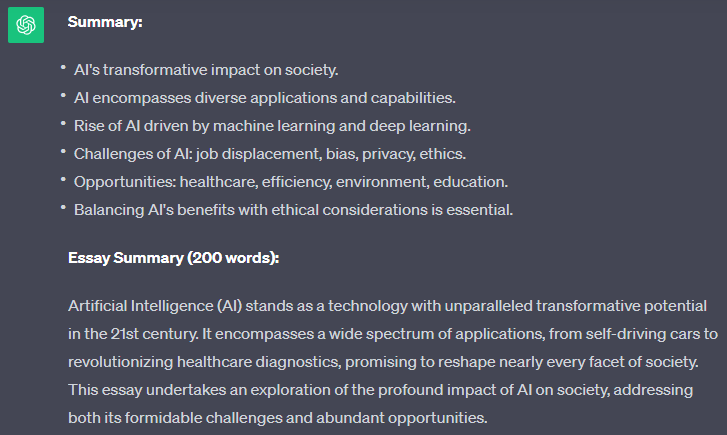

It gave me a summary of the article as it thinks best. But this summary is still very long (I couldn’t take the entire summary in one screenshot though) and I don’t wanna read the entire text.

I want a more specific and more detailed summary of the essay. So let’s try another prompt.

Now, it describes the essay in bullet points as instructed in the prompt. I can’t capture the entire text in one screenshot but I hope you got an idea of how the prompt affects the results.

2. Adopting a persona

Now, let’s talk about adopting a persona. When working with writing prompts, it can be quite useful to create one.

This essentially means that you’re requesting the AI to respond as if it were a specific character.

Just like the example we saw earlier with the English language teacher, using a persona in prompt engineering can ensure that the language model’s output aligns well with the needs and preferences of the intended audience.

This makes it a valuable tool for writing effective prompts.

Let’s explore some examples of adopting a persona.

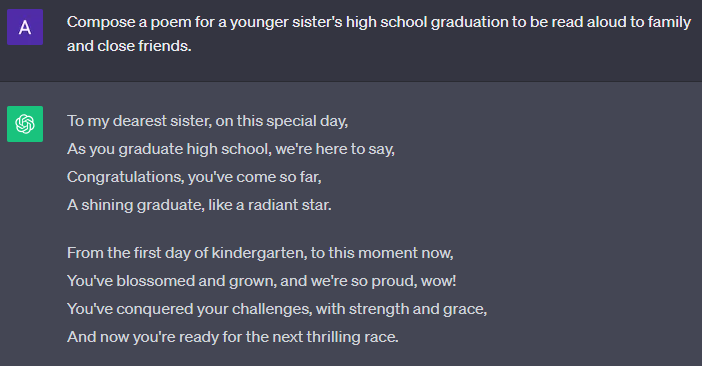

Consider this prompt: “Compose a poem for a younger sister’s high school graduation to be read aloud to family and close friends.”

Now, let’s run this prompt and see what the AI generates.

The poem is decent, maybe even better than what I could write. It’s somewhat generic, though, but that might be what you’re looking for.

However, I believe we can aim for something even better.

Let’s try something else.

Now, I’m going to use a persona in the prompt. I’ll specify who I’m writing as.

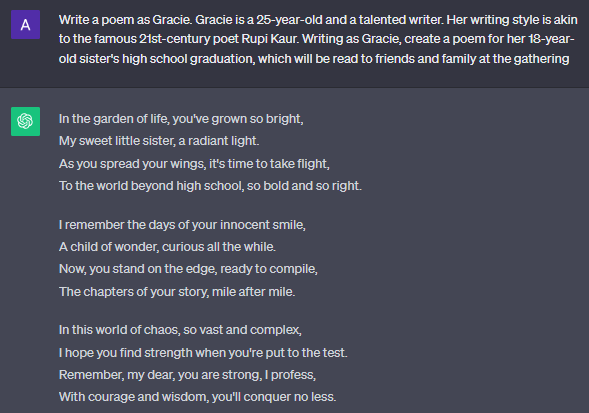

Here’s the new prompt:

“Write a poem as Gracie. Gracie is a 25-year-old and a talented writer. Her writing style is akin to the famous 21st-century poet Rupi Kaur. Writing as Gracie, create a poem for her 18-year-old sister’s high school graduation, which will be read to friends and family at the gathering.”

Now, let’s see what ChatGPT comes up with. Writing as Gracie, who has the style of Rupi Kaur, should give us a unique touch.

The result is much more affectionate.

The poem refers to the sister as the “little sister,” and overall it is more refined and personal, thanks to the persona we added to the prompt.
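If you find yourself reusing personas, the pattern in the Gracie prompt can be captured in a small helper. This is an optional sketch; `build_persona_prompt` and its parameters are invented names, and the wording simply mirrors the prompt above.

```python
def build_persona_prompt(name, traits, task):
    """Compose a prompt that asks the model to write as a given persona."""
    trait_text = " ".join(traits)
    return f"Write as {name}. {trait_text} Writing as {name}, {task}"

prompt = build_persona_prompt(
    name="Gracie",
    traits=[
        "Gracie is a 25-year-old and a talented writer.",
        "Her writing style is akin to the famous 21st-century poet Rupi Kaur.",
    ],
    task="create a poem for her 18-year-old sister's high school graduation.",
)
print(prompt)
```

Swapping in a different name, set of traits, or task gives you a new persona prompt without rewriting the whole thing.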

3. Adjusting the format

We’ve previously explored how to control the format of our text when we restrict the length of our bullet points. It’s a handy technique I frequently use.

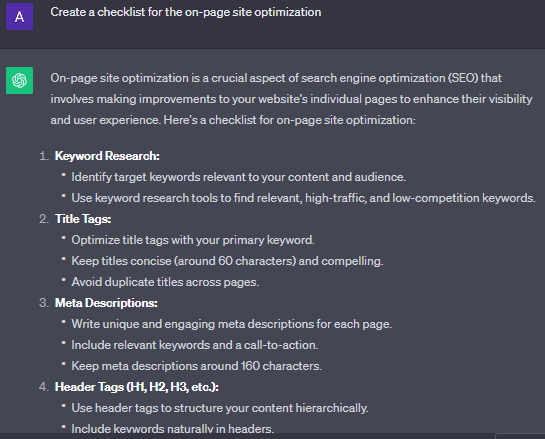

But there are plenty of other formatting options at our disposal. You can indicate if something is a summary, a list, or a detailed explanation. You can even craft checklists.

Let’s dive into how you can achieve this.

I’ll provide an example prompt, and see what happens…

Great! We’ve successfully created a checklist. The full list is much longer, but I can’t capture the entire text in a single screenshot 🙁

ChatGPT offers a wide range of formatting possibilities; just be clear about the format you want, and it should be able to deliver.
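Being clear about the format can be as simple as appending a sentence or two to your request. Here is an optional sketch of that habit; the hiking task is a made-up example (not the prompt from the screenshot), and `build_formatted_prompt` is an invented helper.

```python
def build_formatted_prompt(task, output_format, max_items=None, max_words_per_item=None):
    """Attach explicit format instructions to a task description."""
    parts = [task.strip(), f"Format the answer as {output_format}."]
    if max_items is not None:
        parts.append(f"Use at most {max_items} items.")
    if max_words_per_item is not None:
        parts.append(f"Keep each item under {max_words_per_item} words.")
    return " ".join(parts)

print(build_formatted_prompt(
    "List what I should pack for a weekend hiking trip.",
    "a checklist",
    max_items=10,
    max_words_per_item=8,
))
```

The same task can be reused with “a summary”, “a table”, or “a detailed explanation” as the format, which makes it easy to compare the results.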

Let’s level up

Awesome, now that we’ve covered some basics, let’s dive into a little more advanced topics related to prompt engineering for natural language processing.

In this section, I’ll talk about two different types of prompts:

- Input and Output (IO) prompting

- Chain of Thought (CoT) prompting

Input and Output (IO) prompting

IO prompting makes use of a pre-trained model’s knowledge about words and how they relate to each other, without needing additional training.

This is the simplest type of prompting: you just give a single, detailed prompt.

All the prompts we used above in this article were examples of IO prompts.

Let’s look at one more super simple example:

Chain of thought (CoT) prompting

Now, let’s talk about CoT prompting, a technique that takes our interaction with ChatGPT a step further.

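While IO prompting involves giving a single prompt and receiving a response, CoT prompting builds up to the answer over several steps. No retraining of the model is involved; the extra guidance lives entirely in the conversation.

In the approach shown here, we help the model by supplying specific examples or facts inside the prompt, giving it a small amount of relevant data to work from. (Strictly speaking, supplying examples in the prompt is usually called few-shot prompting, while chain of thought refers to asking the model to reason step by step; the two are often combined.)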

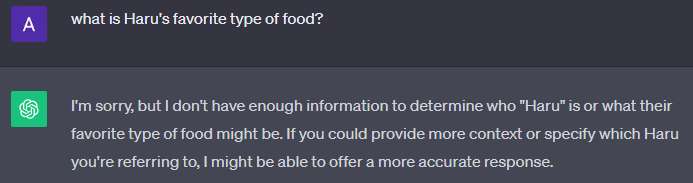

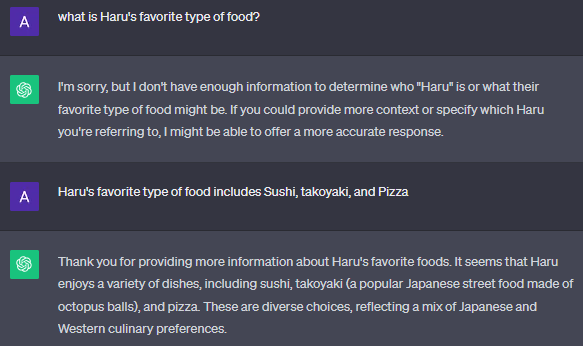

Imagine ChatGPT doesn’t know someone’s favorite types of food.

To address this, we can provide a few examples.

Initially, I asked, “What is Haru’s favorite type of food?”

ChatGPT admits it doesn’t know, which is perfectly fine.

Next, we introduce some sample data: “Haru’s favorite type of food includes Sushi, Takoyaki, and Pizza.”

By doing this, we’re giving ChatGPT essential information to work with.

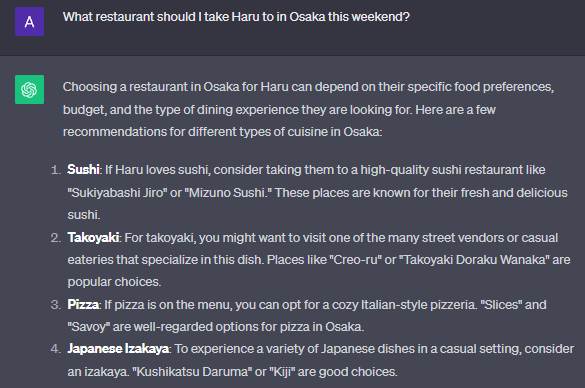

Now, if I ask, “What restaurant should I take Haru to in Osaka this weekend?” the model can ideally understand that Haru’s favorite foods are Sushi, Takoyaki, and Pizza.

With this knowledge, ChatGPT can suggest restaurants in Osaka that align with Haru’s preferences.

Although the information might be based on data up until January 2022, it still offers valuable recommendations.

This example shows how providing specific information in the conversation enables the model to answer questions it couldn’t address without that context.
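The Haru example boils down to putting known facts in front of the question. Here is an optional sketch of that idea; the wording of the instruction line and the name `build_prompt_with_context` are assumptions made for illustration.

```python
def build_prompt_with_context(facts, question):
    """Put known facts ahead of the question so the model can use them."""
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return (
        "Use the following information when answering.\n"
        f"{fact_lines}\n\n"
        f"Question: {question}"
    )

prompt = build_prompt_with_context(
    facts=["Haru's favorite types of food include Sushi, Takoyaki, and Pizza."],
    question="What restaurant should I take Haru to in Osaka this weekend?",
)
print(prompt)
```

Nothing about the model changes here. It simply sees the facts and the question together and can connect the two.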

CoT prompting is also highly helpful when you have a huge and pretty detailed prompt.

In that case, you need to break the task into multiple sections and try creating shorter prompts for each section.

That way, ChatGPT will process less information at a time and provide better results.
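Breaking a big task into sections can be sketched like this. It is an optional illustration only: the goal, the section list, and the `split_into_prompts` helper are all made up, and you would still send each prompt to ChatGPT yourself, one at a time.

```python
def split_into_prompts(overall_goal, sections):
    """Turn one large task into a list of shorter, focused prompts."""
    prompts = []
    for number, section in enumerate(sections, start=1):
        prompts.append(
            f"Overall goal: {overall_goal}\n"
            f"Step {number} of {len(sections)}: {section}"
        )
    return prompts

steps = split_into_prompts(
    "Write a beginner's guide to prompt engineering.",
    ["Draft an outline.", "Write the introduction.", "Write a section on best practices."],
)
for step in steps:
    print(step)
    print("---")
```

Repeating the overall goal in every step keeps each short prompt anchored to the bigger picture.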

CoT prompting is one of the most commonly used prompt engineering techniques among professional prompt engineers.

Also, please check out the article “This prompt will change the way you use ChatGPT”. It is perfect for beginners.

AI hallucinations

Now, let’s talk about something unexpected in the area of artificial intelligence – Hallucinations.

So, what exactly are AI hallucinations?

No, they don’t involve your AI assistants seeing unicorns and rainbows. Instead, this term refers to the peculiar, often erroneous, outputs generated by AI models when they misinterpret data.

A classic illustration of this is Google’s Deep Dream, a project that transforms your photos into bizarre images.

Deep Dream is an experiment that visualizes the patterns learned by a neural network. It has a tendency to over-interpret and exaggerate patterns within images, essentially filling in gaps with its own interpretations.

But sometimes, these interpretations go astray, resulting in peculiar outcomes, which are a prime example of AI models misinterpreting data.

Now, the concept of hallucinations isn’t confined to image models; it can also manifest in text models like ChatGPT.

If it lacks the answer, it might generate a fabricated response, resulting in an inaccurate reply.

You might be wondering why these hallucinations occur. AI models are trained on extensive datasets and rely on their prior knowledge to make sense of new data.

However, occasionally, they make imaginative connections that lead to, let’s say, creative interpretations, and that’s when an AI hallucination takes place.

Beyond their amusing results, these hallucinations offer valuable insights into how AI models comprehend and process data – it’s like peering into their cognitive processes.

Conclusion

Ok! So, we discussed a whole lot about prompt engineering and its vital role in harnessing the power of language models like ChatGPT.

We’ve explored the importance of clarity and personas in crafting effective prompts, as well as the significance of adjusting the format to optimize results.

As we’ve seen, prompt engineering is a key to unlocking the potential of AI, allowing us to interact with language models more effectively and produce meaningful outcomes.

Whether through Input and Output (IO) prompting, Chain of Thought (CoT) prompting, or addressing AI hallucinations, it is clear that understanding and mastering the art of prompt engineering is crucial in our journey to leverage the capabilities of language models to their fullest extent.

As we continue to advance in this field, prompt engineering will remain an indispensable tool, driving innovation and shaping the future of AI-powered communication and problem-solving.

Got any questions? Drop them in the comments below!